Today, time as we know it is kept via an intricate network of approximately 400 atomic clocks operating worldwide. Recently, however, scientists have developed a timekeeping system that may one day make the atomic clock obsolete.

But before we get to that, let’s first take a look at time itself. Fundamentally, time is measured by counting the repetitions of something that happens at regular intervals, such as the swings of a pendulum in a grandfather clock. The less variation there is in that repeating event, the more accurate the clock. A second was once defined as 1/86,400 of the mean solar day, but irregularities in the Earth’s rotation eventually made that definition too imprecise. Nevertheless, it was the definition used by astronomers well into the ’50s. Atomic clocks came about when scientists realized that time could be measured more precisely by tracking something more consistent and less influenced by outside forces. That something was the atom.

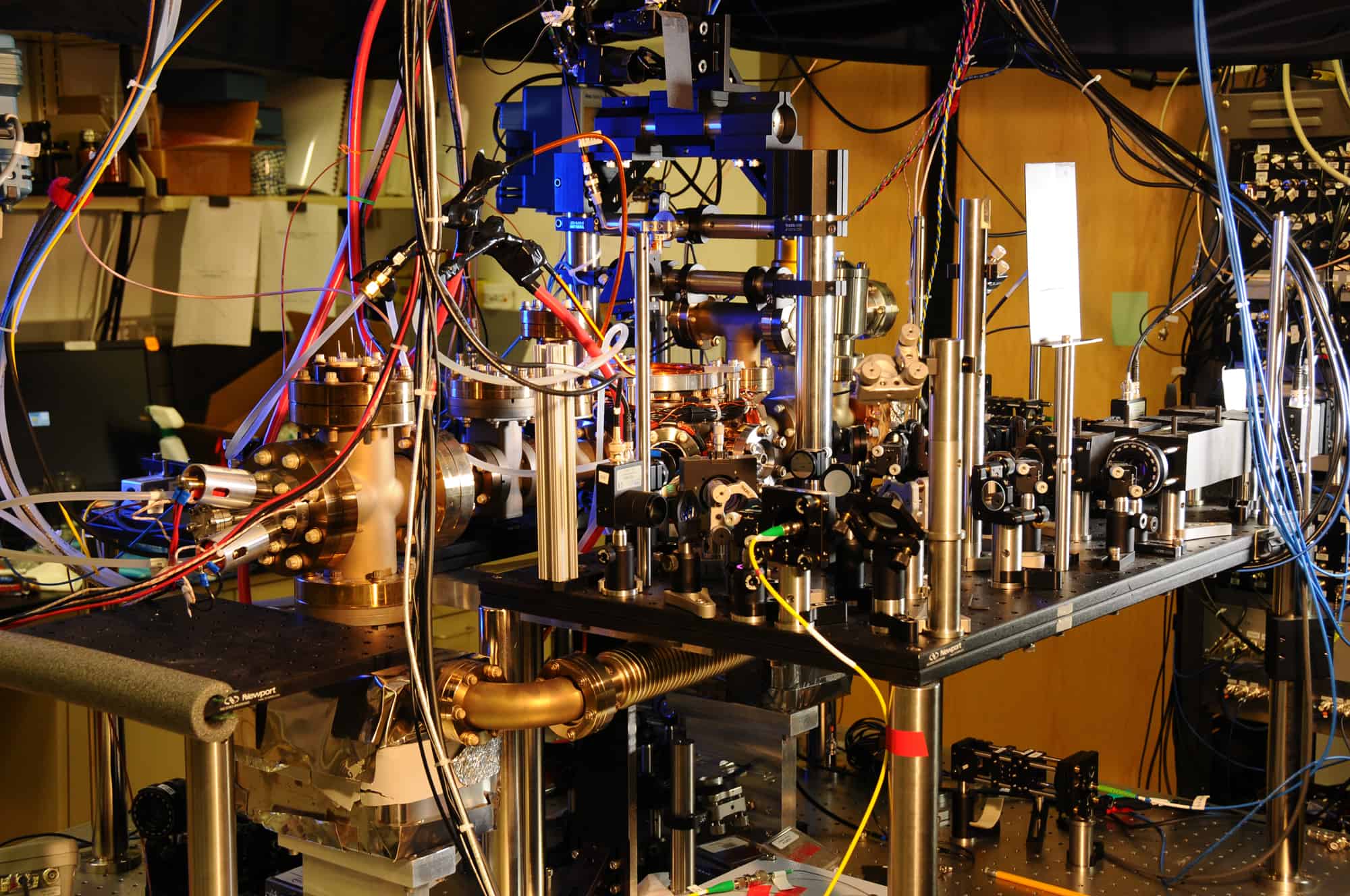

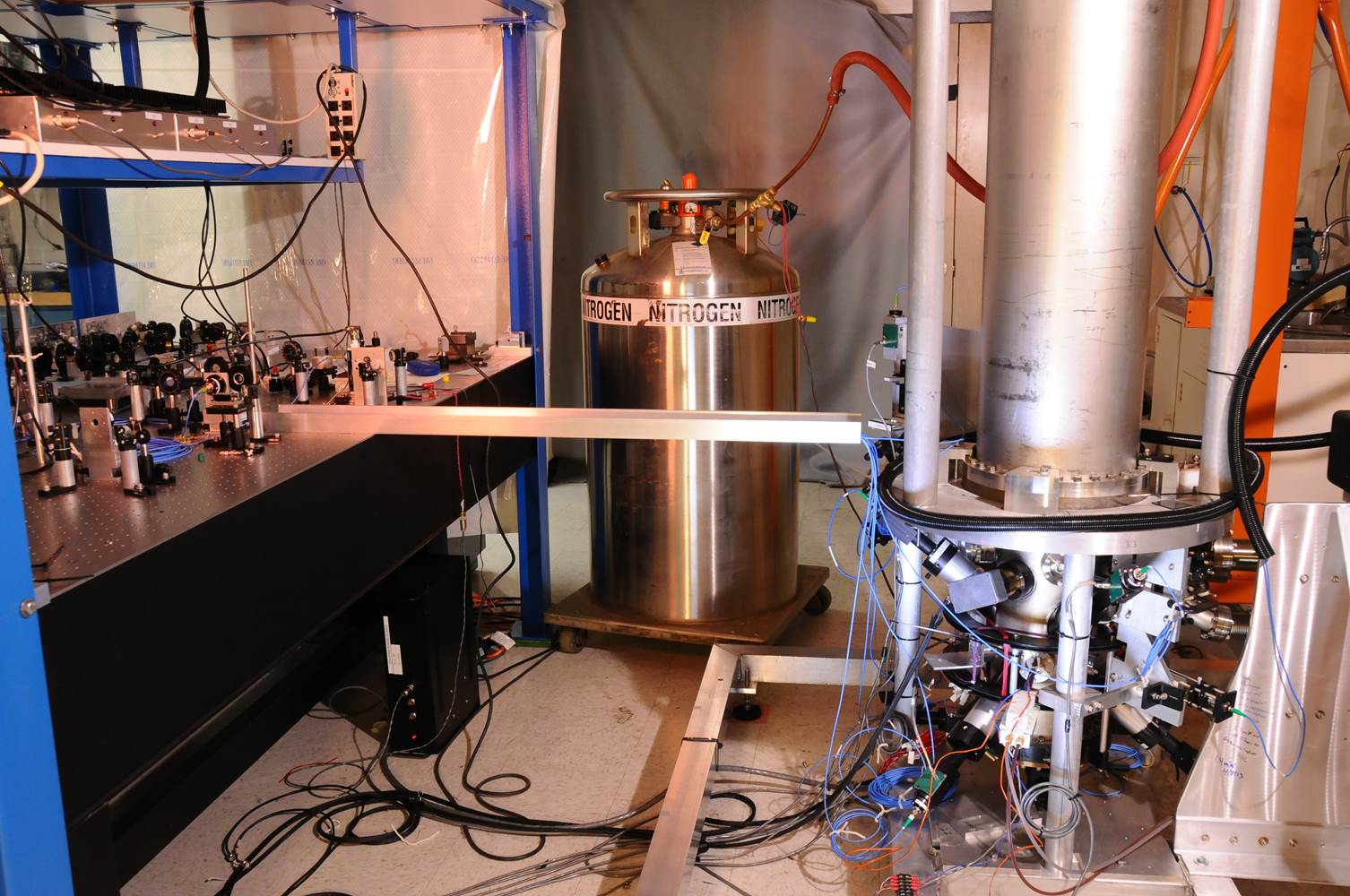

Because all atoms inherently have oscillation frequencies defined by the mechanical nature of the atom itself, atomic clocks are based on observable oscillations at the atomic scale, specifically the transition between two energy states of an atom. Atomic clocks have existed in one form or another since the late ’40s, but it wasn’t until we began to use cesium 133, an isotope of the element cesium, as our oscillation source that we achieved the accuracy we see today with modern atomic clocks. (Some atomic clocks feature less precise designs based on hydrogen and rubidium.)

Atomic clocks do not rely on atomic decay, so they’re not radioactive. Rather, they keep time by counting the number of times the atom switches between states. Since 1967, the International System of Units has defined the second as the time that elapses during 9,192,631,770 cycles of the radiation produced by the transition of cesium 133 between two energy levels.
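To make that definition concrete, here is a minimal Python sketch of the arithmetic it implies: dividing a cycle count by 9,192,631,770 gives elapsed seconds. The function and constant names are invented for illustration, and a real clock counts cycles in hardware rather than in software, so treat this as a worked example of the numbers only.

```python
from fractions import Fraction

# Cycles of cesium-133 radiation per second, per the 1967 SI definition.
CESIUM_CYCLES_PER_SECOND = 9_192_631_770

def cycles_to_seconds(cycles: int) -> Fraction:
    """Convert a count of radiation cycles to elapsed seconds (exact)."""
    return Fraction(cycles, CESIUM_CYCLES_PER_SECOND)

def seconds_to_cycles(seconds: int) -> int:
    """Number of cycles that elapse in a whole number of seconds."""
    return seconds * CESIUM_CYCLES_PER_SECOND

# Cycles counted over a day of 86,400 seconds.
cycles_per_day = seconds_to_cycles(86_400)
print(cycles_per_day)

# Duration of a single cycle, in seconds.
print(float(cycles_to_seconds(1)))
```

A single cycle lasts roughly a tenth of a nanosecond, which gives a sense of how finely a cesium clock slices each second compared with the swing of a pendulum.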
